Here is an example of how to create a table with a CLOB:
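A minimal sketch, assuming a hypothetical table named `clob_demo` with columns `id` and `doc` (these names are illustrative and not from any particular schema):

```sql
-- Hypothetical table: the names clob_demo, id and doc are illustrative only.
CREATE TABLE clob_demo (
    id   NUMBER PRIMARY KEY,
    doc  CLOB
);

-- EMPTY_CLOB() initializes the LOB locator so the value can be written later;
-- a NULL CLOB has no locator to write through.
INSERT INTO clob_demo (id, doc) VALUES (1, EMPTY_CLOB());
```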

Some common operations on CLOBs:

Writing to a CLOB:
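One way to do this is with the `DBMS_LOB` package. The sketch below assumes a hypothetical table `clob_demo (id NUMBER, doc CLOB)` that already contains a row with `id = 1` whose `doc` was initialized with `EMPTY_CLOB()`; the variable names are illustrative.

```sql
DECLARE
    l_doc   CLOB;
    l_text  VARCHAR2(32767) := 'Some text to store in the CLOB';
BEGIN
    -- Fetch the LOB locator and lock the row; FOR UPDATE is needed before writing.
    SELECT doc INTO l_doc
      FROM clob_demo
     WHERE id = 1
       FOR UPDATE;

    -- DBMS_LOB.WRITE(lob_loc, amount, offset, buffer): offsets are 1-based.
    DBMS_LOB.WRITE(l_doc, LENGTH(l_text), 1, l_text);

    -- Append more text to the end of the existing value.
    DBMS_LOB.WRITEAPPEND(l_doc, LENGTH(' and more'), ' and more');

    COMMIT;
END;
/
```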

Substr:
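A possible query, again assuming the hypothetical `clob_demo (id, doc)` table:

```sql
-- DBMS_LOB.SUBSTR(lob_loc, amount, offset): amount comes before offset,
-- the reverse of the SQL SUBSTR(string, position, length) argument order.
SELECT DBMS_LOB.SUBSTR(doc, 100, 1) AS first_100_chars
  FROM clob_demo
 WHERE id = 1;
```

The result is a `VARCHAR2`, so in plain SQL the requested amount has to fit within the `VARCHAR2` size limit.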

Finding the length of a CLOB:
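A short sketch using `DBMS_LOB.GETLENGTH`, assuming the hypothetical `clob_demo (id, doc)` table:

```sql
-- For a CLOB the length is reported in characters, not bytes.
SELECT id, DBMS_LOB.GETLENGTH(doc) AS doc_length
  FROM clob_demo;
```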

Some caveats when dealing with LOBs:

- LOB columns cannot be used directly in GROUP BY, ORDER BY, SELECT DISTINCT, aggregate functions, or join conditions

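- You cannot convert an existing column (for example a VARCHAR2) to a CLOB with ALTER TABLE ... MODIFY; Oracle rejects the change with ORA-22858. A CLOB column has to be declared as a CLOB from the start, either at table creation time or by adding it as a new column with ALTER TABLE ... ADD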

- The Oracle SQL parser can only handle string literals up to 4000 characters in length, so longer values have to be supplied through bind variables or built up in PL/SQL
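Two of these caveats have common workarounds. The sketch below is illustrative only and assumes the hypothetical `clob_demo (id NUMBER, doc CLOB)` table: it sorts on a `VARCHAR2` prefix of the CLOB instead of the CLOB itself, and it builds a long value in a PL/SQL variable rather than writing it as a SQL literal.

```sql
-- Ordering by the CLOB column itself is rejected; order by a VARCHAR2 prefix instead.
SELECT id
  FROM clob_demo
 ORDER BY DBMS_LOB.SUBSTR(doc, 200, 1);

-- A literal longer than 4000 characters fails in plain SQL, so build the value
-- in a PL/SQL variable and let it be bound into the INSERT.
DECLARE
    l_big CLOB;
BEGIN
    l_big := RPAD('x', 32767, 'x');   -- 32767 characters, the PL/SQL VARCHAR2 maximum
    l_big := l_big || l_big;          -- 65534 characters, well past the SQL literal limit
    INSERT INTO clob_demo (id, doc) VALUES (2, l_big);
    COMMIT;
END;
/
```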

Dealing with CLOBs in Java using the Spring Framework:

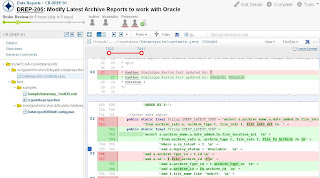

The code below shows the basic parts of how to read and write to a CLOB using Spring 2.x.

There are other options as well, such as using a MappingSqlQuery to read from the CLOB.

References:

- Short examples from ASKTOM

- Handling CLOBs - Made easy with Oracle JDBC